Mastering Structured Logging: A Comprehensive Guide

- With Code Example

- February 15, 2024

In today’s complex software ecosystems, application logs are invaluable. They allow developers to peek under the hood and uncover what’s really happening inside their systems. But traditional, unstructured log data leaves much to be desired. These messy text blobs fail to provide the full picture. To truly harness the power of logs, we need a more thoughtful approach - one that generates logs designed for consumption.

Table of Contents

Introduction:

This is where structured logging enters the scene. This modern logging paradigm provides the missing piece that unlocks deeper insights from application logs. Unlike opaque streams of text, structured logs embed important contextual metadata right in each log statement. This machine-readable data enables powerful searching, filtering, analysis and visualization.

With structured logging, troublesome bugs and performance issues never hide for long. Trends and anomalies in application behavior become far more visible. Parsing and ingesting log data into analytics tools becomes trivial. In short, structured logging brings sanity, understanding and transparency to the wild world of application logging.

For developers building complex, mission-critical software, structured logging is a must-have tool for observability. This blog post dives into the key concepts of structured logging and why it should be a best practice for engineering teams today. We’ll explore popular structured logging libraries for various languages and frameworks. By understanding structured logging, you’ll level up your ability to design, build and monitor applications that thrive under real-world conditions. The future of logging has arrived - let’s explore!

What is Structured Logging?

Structured logging is a modern approach to application logging that aims to make log data more usable. It works by having log statements that output data in a structured format, rather than unstructured plain text.

Instead of free-form messages, each log line contains key-value pairs and standardized fields. For example, a web request log could have fields like “timestamp”, “latency”, “request_id”, “user_id”, “endpoint”, etc. This provides crucial context about the log statement.

All the relevant metadata is right there in the log line itself, rather than buried in surrounding text. This machine-readable structure enables powerful searching, filtering, aggregation and visualization. Structured log data integrates seamlessly into data pipelines and analytics tools.

Benefits of structured logging:

The benefits of structured logging are huge. It makes debugging much faster when you can filter logs by request ID or user ID. Performance patterns and trends can be monitored through fields like latency or CPU time. Rate limiting and aggregation becomes trivial. Contextual data aids investigation of issues.

Overall, structured logging results in higher quality log data that provides greater insight into application behavior. It moves away from logging as an afterthought, towards logs that are optimized for consumption and value. Any serious application can gain observability superpowers through adopting structured logging best practices.

Setting Up Log Levels:

One key aspect of logging is setting appropriate log levels to control the verbosity of log output. Common log levels include DEBUG, INFO, WARNING, ERROR, and FATAL, each serving a specific purpose in conveying the severity of events. Configuring log levels ensures that only relevant information is captured, improving log clarity and reducing noise.

import "github.com/sirupsen/logrus"

// Configure logger with desired log level

logrus.SetLevel(logrus.InfoLevel)

How to Use a Logger Level Map:

A logger level map is a mapping between loggers and their respective log levels. This mapping allows developers to fine-tune log levels for different components or environments. By customizing log levels based on context, developers can prioritize critical information and suppress less important logs.

var loggerLevels = map[string]logrus.Level{

"module1": logrus.DebugLevel,

"module2": logrus.InfoLevel,

}

func getLogger(module string) *logrus.Logger {

logger := logrus.New()

logger.SetLevel(loggerLevels[module])

return logger

}

The Benefits of Using Different Log Levels for Different Environments:

Adopting different log levels for various environments—such as development, testing, and production—offers several advantages. In development, verbose logging aids debugging efforts, while in production, minimizing log verbosity conserves resources and simplifies log analysis. Tailoring log levels to each environment optimizes logging behavior and enhances operational efficiency.

Using Structured Logging:

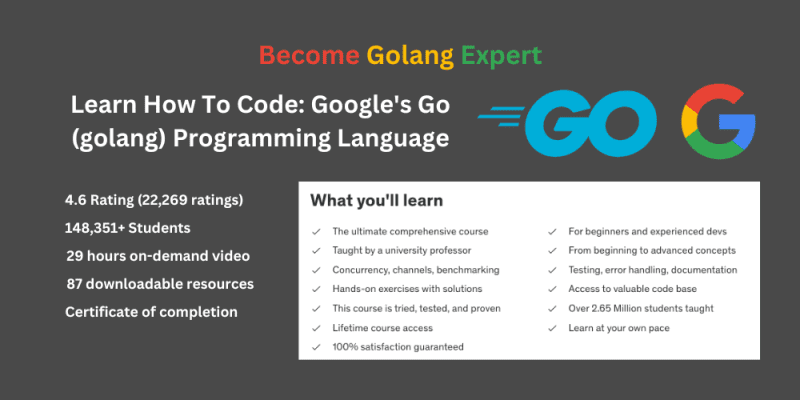

Implementing structured logging involves integrating a logging library that supports structured log formats. By leveraging libraries like Slog in Go, developers can seamlessly transition to structured logging and unlock its benefits. Structured logging libraries offer robust features for formatting, filtering, and enriching log data, empowering developers to gain deeper insights into their applications.

import "github.com/sirupsen/logrus"

// Example of structured logging with logrus

logger := logrus.New()

logger.WithFields(logrus.Fields{

"user": "john.doe",

"action": "login",

"success": true,

}).Info("User logged in successfully")

How to Implement Custom Log Handlers:

Custom log handlers extend the functionality of logging libraries by enabling developers to customize log output and behavior. Whether it’s adding additional metadata, sending logs to external services, or implementing custom log formatting, custom log handlers provide flexibility in tailoring logging solutions to specific requirements.

Contextual Logging:

Contextual logging enriches log entries with contextual information, such as request IDs, user identifiers, and timestamps. This additional metadata enhances log traceability and simplifies root cause analysis by providing crucial context about each log event. Integrating contextual logging with middleware allows developers to automatically inject context into log entries, streamlining log generation and analysis.

How to Use the Logger Middleware to Attach Contextual Information to Logs:

Logger middleware seamlessly integrates with web frameworks to capture request-specific information and inject it into log entries. By incorporating middleware into the request handling pipeline, developers can automatically append contextual data, such as request IDs and user agents, to log messages. This approach enhances log comprehensibility and facilitates correlation between logs and specific application interactions.

The Importance of Having a Request ID in Your Logs for Debugging Purposes:

Request IDs serve as unique identifiers for individual requests, facilitating request tracing and debugging in distributed systems. Including request IDs in log entries allows developers to trace the flow of requests across various components and identify bottlenecks or errors. With request IDs, troubleshooting becomes more manageable, as developers can correlate logs from different services and reconstruct the execution path of each request.

Storing Your Logs:

The way application logs are stored can make or break the usefulness of log data. Choosing the optimal storage solution requires evaluating factors like scale, speed, retention needs and access patterns. The days of simply logging to local text files are gone for serious production systems. Modern applications need robust backend infrastructure to harness logs effectively.

For small scale use cases, local filesystem storage provides simplicity and easy access. But as data volumes, users and infrastructure grows, limitations emerge in manageability, durability and aggregation abilities. This is where centralized logging services shine.

Cloud-hosted platforms like AWS CloudWatch Logs and Datadog provide automated, real-time log aggregation from diverse infrastructure. Elastic scalability, fine-grained access controls and powerful analytics capabilities enable actionable insights. However, costs also increase with data volumes.

Open source log stores like Elasticsearch, Kafka and Redis deliver similar benefits on-premises or across cloud environments. They can be integrated into a scalable logging pipeline. But they require expertise to operate, tune and secure.

Regulatory compliance should also guide log storage selection. Some frameworks like HIPAA mandate data protection, retention policies and access auditing that not all solutions fully support.

By evaluating their unique workload needs,DevOps teams can choose a log storage architecture for optimal utility. The ability to tail a local file provides simplicity, while cloud-based analytics unlocks hidden app insights. Blending approaches brings together the best of both worlds.

Recommendations for Where to Store Your Logs (File, Cloudwatch, Data Dog):

For small to medium sized applications, storing log files locally can be a good option. This allows for simplicity, especially in development environments. Log files can be tailed in real time and accessed directly for inspection. However, local log files become harder to manage as application scale and complexity grows.

Here is a simple example to log messages to a file in Go:

package main

import (

"log"

"os"

)

func main() {

// Open log file

f, err := os.OpenFile("app.log", os.O_APPEND|os.O_CREATE|os.O_WRONLY, 0644)

if err != nil {

log.Fatal(err)

}

// Set logger to log to file

logger := log.New(f, "", log.LstdFlags)

// Log messages

logger.Println("Starting application...")

logger.Printf("Log message: %s", "Hello World")

// Close file

f.Close()

}

The key steps are:

Open a file for writing using

os.OpenFile(). This will append and create the file if needed.Create a

log.Loggerthat writes to the opened file.Call logger methods like

Println()andPrintf()to log messages.Close the file when done.

This will log the messages to the app.log file. File rotation, log splitting and other enhancements can be added on top of this basic logging.

Cloud-based log aggregation services like AWS CloudWatch Logs become preferable for production systems at scale. CloudWatch offers automated log collection, highly scalable storage, and integrations with other AWS services. Useful features like monitoring, alerts, and streaming to other data platforms is available. Cost can be optimized through features like log expiration policies.

Here is an example of how to send application logs to AWS CloudWatch Logs in Go:

package main

import (

"github.com/aws/aws-sdk-go/aws"

"github.com/aws/aws-sdk-go/aws/session"

"github.com/aws/aws-sdk-go/service/cloudwatchlogs"

"log"

)

func main() {

// Create CloudWatch Logs client

sess := session.Must(session.NewSessionWithOptions(session.Options{

SharedConfigState: session.SharedConfigEnable,

}))

svc := cloudwatchlogs.New(sess)

// Log group and stream names

logGroupName := "myAppLogs"

logStreamName := "appLogStream"

// Create log group and stream if they don't exist

svc.CreateLogGroup(&cloudwatchlogs.CreateLogGroupInput{

LogGroupName: aws.String(logGroupName),

})

svc.CreateLogStream(&cloudwatchlogs.CreateLogStreamInput{

LogGroupName: aws.String(logGroupName),

LogStreamName: aws.String(logStreamName),

})

// Log messages

logger := log.New(os.Stdout, "", log.LstdFlags)

logger.Println("Application started")

// Send logs to CloudWatch

_, err := svc.PutLogEvents(&cloudwatchlogs.PutLogEventsInput{

LogGroupName: aws.String(logGroupName),

LogStreamName: aws.String(logStreamName),

// Log events added here

})

if err != nil {

logger.Printf("Error sending logs: %v\n", err)

}

}

The key steps are:

Create CloudWatch Logs client using AWS SDK.

Create log group and stream if they don’t exist already.

Log messages locally.

Send log events to CloudWatch using PutLogEvents API call.

This allows sending application logs to CloudWatch without managing file uploads. Other features like Kinesis stream ingestion, alarms, retention etc can also be configured.

Other hosted log management platforms like Datadog , Logz.io , SumoLogic , etc. also provide robust enterprise features for large or mission-critical systems. Advanced analysis, visualization, archiving capabilities and machine learning-powered alerting rules are common. They allow centralization of logs across on-prem, cloud, and hybrid environments. Cost varies based on data volume ingested.

Here is an example of how to send logs from a Go application to Datadog using their Go library:

package main

import (

"github.com/DataDog/datadog-go/statsd"

datadog "github.com/DataDog/datadog-go/v5/datadog"

)

func main() {

// Create Datadog client

ddClient, err := datadog.NewClient("<DATADOG_API_KEY>", "<DATADOG_APP_KEY>")

if err != nil {

log.Fatal(err)

}

// Create statsd client

statsd := statsd.New("127.0.0.1:8125")

// Log some metrics

statsd.Gauge("requests.count", 100, []string{"version:1.0"}, 1)

// Send logs

ddClient.Logs.Send(&datadog.LogsPayload{

Series: []datadog.LogsSeries{

{

Context: "application",

Source: "go",

Service: "app",

Status: datadog.LogsStatusInfo,

Tags: []string{"env:dev"},

Logs: []datadog.LogsMessage{

{

Message: "App started",

Timestamp: datadog.CurrentEpochTime(),

},

},

},

},

})

}

The key steps are:

Create Datadog client using API and app keys.

Create Statsd client for custom metrics.

Send metrics and logs to Datadog via client.

This allows sending both metrics and logs from a Go app to Datadog’s platform for monitoring and analytics.

For microservices architectures, a distributed logging pipeline is recommended. Each app logs locally and streams to a central aggregator like Kafka or Fluentd. This avoids central dependency or bottleneck. Open source options like Graylog and ELK stack work well here.

In summary,evaluate factors like scale, cost, team skills, and regulatory requirements when choosing log storage solutions. Start simple with local files, then evolve to cloud services or self-managed platforms as needed. This balances ease of use with robustness for long-term log management as systems grow.

Conclusion:

Structured logging represents a paradigm shift in logging practices, offering enhanced readability, searchability, and analysis capabilities. By adopting structured logging and leveraging tools like Slog and logger middleware, developers can streamline log management, gain deeper insights into application behavior, and expedite troubleshooting efforts. Embracing structured logging empowers developers to unlock the full potential of their log data and elevate their application monitoring and debugging workflows.

Recap of the Key Points

Structured logging organizes log messages into a standardized format, enhancing readability and analysis.

Setting appropriate log levels ensures that only relevant information is captured, optimizing log clarity and efficiency.

Using structured logging libraries like Slog in Go facilitates seamless integration and unlocks advanced logging features.

Contextual logging enriches log entries with additional metadata, facilitating troubleshooting and root cause analysis.

Choosing the right log storage solution depends on factors such as scalability, accessibility, and compliance requirements.

Related Posts

Simplify Your Debugging Process With Powerful Golang Logrus Features

In modern software development, effective logging plays a crucial role in understanding and monitoring application behavior. For Golang developers, Logrus stands out as a powerful logging library that offers a wide range of features and capabilities to enhance the logging experience.